I left frontend for SDET, then came back

Have you ever been afraid to SSH into a Linux machine to retrieve some logs, or, God forbid, restart a failed service?

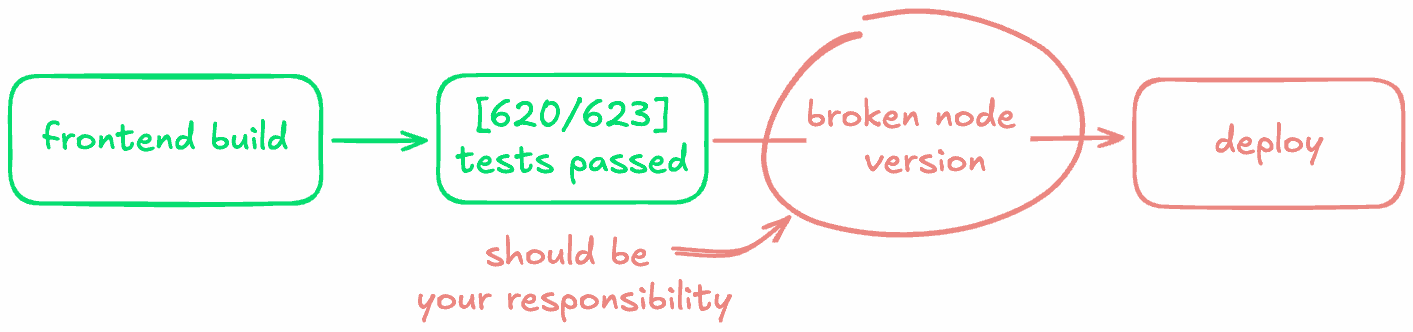

Many of us live in the safe, local world of our IDEs and fancy, Chromium-based browsers, with no need to escape from our comfort zones. I used to think that there was no point in challenging myself with CI issues when I could just wait for someone else to fix them. The code works locally. "80% coverage" AI-written tests pass. Whatever happens next isn't my problem, right? Now, I would argue otherwise.

How & Why I switched to SDET

During my React premature-optimization era, a recruiter reached out to me with a role I was skeptical about. It was an emerging test automation team, so I'd be writing deployment configs, working with CI, and building E2E tests from scratch. After a few interviews, I realized my reluctance wasn't really about the role.

It was fear.

Fear of not being able to catch up with so many things that were new to me. Things I'd spent years avoiding would suddenly become my responsibility.

Then, there's that cliché interview question: "Why should we hire you?" I never had a good answer. I could talk about reusable components and cutting-edge tech, but so could everyone else.

The fact is, the SDET role scared me — that's exactly why I took it.

Being an SDET

The first thing you notice after becoming an SDET is that nobody really cares about you or your tests. Your work is either invisible or gets a quick "wow, great job," which is forgotten by the next day. Compared to highly visible frontend work, that sucks.

The difference is simple: a few hours spent on a new button or dialog produces something tangible you can immediately show. Testing doesn't work that way. You can spend months writing hundreds of tests, and unless you actively make them reliable and easy to use, nobody notices they exist at all.

Fighting for visibility

First of all, why do test results need visibility? The answer is simple: so that failures get fixed quickly instead of sitting in the backlog. Failed tests are an accumulating trap, like a snowball rolling downhill: the more of them there are, the harder it becomes to stay sane. Over time, excessive failures further reduce visibility. Once everything is red, nobody looks at individual failures anymore, and the tests become little more than a waste of CPU cycles.

When a team reaches the point where the test results are reviewed on every run, testing stops being noise and becomes a representation of a system's state.

How to achieve higher visibility

- Camouflage the tests

This might sound silly, but I've found it surprisingly effective. The idea is simple: make the tests behave like a real QA would. Our system automatically creates bugs with screenshots and replication steps, then assigns them to the dev team lead. Once the developer fixes the bug, they can mark it as resolved, and our test runner will either close the bug or reopen it with an updated description if the issue persists.

- Minimize the bugs

Camouflaging tests is effective, but they can quickly lose their impact if too many bugs flood the system. With a constantly changing UI, some test failures are inevitable, but there are ways to reduce noise and keep bug reports minimal:

- Dependency-aware execution: Every test has a list of "parent" tests (yes, we deliberately chose what's typically considered a bad practice in testing). For example, if the login fails, there's no point in running the remaining tests because they're guaranteed to fail. This speeds up execution and generates substantially fewer bugs. The same system applies when the site is down; we simply skip everything.

- Capping repeated failures: If the same error appears multiple times, we only log a limited number of bugs for it. For instance, if a random error dialog pops up, only 8 bugs are created instead of dozens.
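To make the rules so far concrete, here is a minimal sketch of how they could fit together: reopen-or-close on re-run, skipping tests whose parents failed, and capping bugs per error. It is not our actual runner. Every name in it (`TestCase`, `Bug`, `runSuite`, `reconcileBug`, `MAX_BUGS_PER_ERROR`) is hypothetical, and it assumes the test list is already ordered so that parents come before children.

```typescript
// All names here are hypothetical; this is not a real tracker or runner API.
type TestResult = "passed" | "failed" | "skipped";
type BugStatus = "open" | "resolved" | "closed";

interface Bug {
  testName: string;
  status: BugStatus;
  description: string;
}

interface TestCase {
  name: string;
  parents: string[]; // tests that must pass before this one is worth running
  run: () => void; // throws on failure
}

interface RunReport {
  results: Record<string, TestResult>;
  bugsFiled: string[]; // one entry per bug that would be created
}

const MAX_BUGS_PER_ERROR = 8; // the cap from the example above

// Assumes `tests` lists parents before their children.
function runSuite(tests: TestCase[], siteIsUp: boolean): RunReport {
  const results: Record<string, TestResult> = {};
  const bugsFiled: string[] = [];
  const bugsPerError: Record<string, number> = {};

  for (const test of tests) {
    // Skip when the site is down or any parent did not pass (or never ran).
    const blocked = !siteIsUp || test.parents.some((p) => results[p] !== "passed");
    if (blocked) {
      results[test.name] = "skipped";
      continue;
    }
    try {
      test.run();
      results[test.name] = "passed";
    } catch (err) {
      results[test.name] = "failed";
      const message = err instanceof Error ? err.message : String(err);
      const seen = bugsPerError[message] ?? 0;
      // File a bug only while this error message is still under the cap.
      if (seen < MAX_BUGS_PER_ERROR) {
        bugsPerError[message] = seen + 1;
        bugsFiled.push(`${test.name}: ${message}`);
      }
    }
  }
  return { results, bugsFiled };
}

// After a developer marks a bug resolved, the next run confirms or reopens it.
function reconcileBug(bug: Bug, result: TestResult, latestError?: string): Bug {
  if (bug.status !== "resolved") return bug;
  if (result === "passed") return { ...bug, status: "closed" };
  if (result === "failed") {
    return { ...bug, status: "open", description: latestError ?? bug.description };
  }
  return bug; // skipped: no evidence either way (an assumption of this sketch)
}
```

Grouping failures by the raw error message is the simplest possible key. A real setup would probably normalize the message first, for example by stripping IDs and timestamps, so that near-identical errors count against the same cap.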

- Keep moving

Always find new ways to improve or replace parts of the current workflow. Do you have problems with debugging failed deploys? Maybe you could write a quick parser of the logs and attach those to your reporting system. You can even vibe-code those.
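As an illustration of how small such a helper can be, here is a sketch of a log parser that pulls failure lines out of a deploy log, together with a little surrounding context. The log format is an assumption: I'm pretending failures are marked with `ERROR` or `FATAL`, so adjust the pattern to whatever your logs actually look like. `extractFailures` and `LogExcerpt` are made-up names.

```typescript
interface LogExcerpt {
  lineNumber: number; // 1-based line of the matching entry
  lines: string[]; // the matching line plus surrounding context
}

// Assumption: failures are marked with ERROR or FATAL.
const FAILURE_PATTERN = /\b(ERROR|FATAL)\b/;

function extractFailures(log: string, contextLines: number = 2): LogExcerpt[] {
  const lines = log.split(/\r?\n/);
  const excerpts: LogExcerpt[] = [];
  lines.forEach((line, index) => {
    if (!FAILURE_PATTERN.test(line)) return;
    // Clamp the context window to the bounds of the log.
    const start = Math.max(0, index - contextLines);
    const end = Math.min(lines.length, index + contextLines + 1);
    excerpts.push({ lineNumber: index + 1, lines: lines.slice(start, end) });
  });
  return excerpts;
}
```

The returned excerpts are short enough to paste straight into a bug description, which is the whole point: the person reading the bug shouldn't need to open the full log.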

Pragmatic approach to tests

Once I started writing tests full-time, I had all the time in the world to make my scenarios as sophisticated as possible. Did it help? No. I found that simpler tests bring higher ROI. They're easier to write, break less often, and build trust in the suite over time. You might argue that simple tests miss edge cases. In practice, the bugs filed by clever tests are eventually closed as "By Design," and they harm the reputation of the reliable tests along the way.

SDET should be resilient

Do you know the main enemy of reliable tests? Flaky environments. An SDET has to ensure that the deployments are stable; if current deployment pipelines are consistently failing, your tests are slowly rotting.

Please make sure to write your own pipelines, or at least some wrappers, to guarantee a reliable schedule!
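One possible reading of "wrapper" is a thin layer that retries a flaky deploy step and only starts the suite once the environment is actually up. The sketch below assumes exactly that. `runWithRetries` and `scheduledRun` are hypothetical names, the retry count of 3 is arbitrary, and the steps are synchronous callbacks to keep the example short (real deploy steps would almost certainly be async).

```typescript
interface StepOutcome {
  ok: boolean;
  attempts: number;
  errors: string[]; // one message per failed attempt
}

// Runs a pipeline step until it succeeds or the attempt budget is spent.
function runWithRetries(step: () => void, maxAttempts: number): StepOutcome {
  const errors: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      step();
      return { ok: true, attempts: attempt, errors };
    } catch (err) {
      errors.push(err instanceof Error ? err.message : String(err));
    }
  }
  return { ok: false, attempts: errors.length, errors };
}

// Only start the suite when the deploy succeeded, so a broken environment is
// reported as an environment problem rather than as a wall of failed tests.
function scheduledRun(deploy: () => void, runTests: () => void): "tests-ran" | "deploy-failed" {
  const outcome = runWithRetries(deploy, 3);
  if (!outcome.ok) return "deploy-failed";
  runTests();
  return "tests-ran";
}
```

Keeping "deploy failed" as its own outcome matters more than the retry itself: it stops a dead environment from turning into dozens of misleading test failures.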

Reduce friction

Be proactive when collaborating with other devs and treat them as your "customers." Always try to make their lives simpler; by doing so, you are one step closer to making tests a norm in the development workflow.

Have meaningful error messages

What failed? Why? Nobody is reading that, sorry.

Generic assertion with no context.

Clear context and action.

Your error messages should tell you what failed and, ideally, make the cause obvious.
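Here is a small, framework-free sketch of the contrast between a generic assertion and one that carries context. Both helpers are hypothetical, as is the `FailureContext` shape. Most test frameworks have their own way of attaching a custom message, so treat this as an illustration of the idea rather than an API to copy.

```typescript
// Generic: tells you only that something went wrong.
function assertEqualGeneric(actual: unknown, expected: unknown): void {
  if (actual !== expected) throw new Error("Assertion failed");
}

interface FailureContext {
  step: string; // what the test was doing
  target: string; // which element or value was checked
  hint?: string; // where to look first
}

// Contextual: says what was being done, what was checked, and what came back.
function assertEqualWithContext(actual: unknown, expected: unknown, ctx: FailureContext): void {
  if (actual === expected) return;
  const lines = [
    `${ctx.step}: ${ctx.target} was ${JSON.stringify(actual)}, expected ${JSON.stringify(expected)}.`,
  ];
  if (ctx.hint) lines.push(`Hint: ${ctx.hint}`);
  throw new Error(lines.join("\n"));
}
```

With a call like `assertEqualWithContext(badgeText, "3", { step: "After adding 3 items to the cart", target: "cart badge text" })`, a failure reads as a sentence a developer can act on without opening the test source.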

Coming back

The decision

I'd been working as an SDET for more than a year. Even though it gave me life-changing lessons on how to approach my work, I still decided to switch back to frontend at the same company. The main reason is that the deeper you go, the more you build project-specific knowledge that doesn't transfer. The lessons got smaller.

Honestly, I just missed the frontend, and now I'm no longer afraid to do some nasty CI stuff.

The move

Remember when I said broken pipelines should be your responsibility? It became clear to me that the resilience part applies not only to SDETs but also to all devs.

The noticeable shift is that I no longer ignore failed CI. I check why something broke instead of waiting for someone else to notice. You won't always be able to fix it without proper DevOps knowledge, but it's worth learning — be the dev who actually cares!

Writing a lot of tests changed how I write code. I pay more attention to edge cases and structure things so they're easy to test. For example, when using React, I now extract as much logic into plain JS functions as possible — no hooks, no components, no worrying about shallow vs. full DOM rendering.
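A made-up example of what I mean: filtering and sorting a product list. Instead of burying this inside a component or a custom hook, it lives in a plain function that can be tested with nothing but an array. `Product` and `visibleProducts` are hypothetical names, not code from a real project.

```typescript
interface Product {
  name: string;
  price: number;
  inStock: boolean;
}

// Pure logic: no hooks, no component, no rendering required to test it.
function visibleProducts(products: Product[], query: string, onlyInStock: boolean): Product[] {
  const needle = query.trim().toLowerCase();
  return products
    .filter((p) => !onlyInStock || p.inStock)
    .filter((p) => needle === "" || p.name.toLowerCase().indexOf(needle) !== -1)
    .sort((a, b) => a.price - b.price); // sorts the filtered copy, not the input
}
```

The component then shrinks to calling `visibleProducts(products, query, onlyInStock)` and rendering the result, so the interesting edge cases (empty query, stray whitespace, nothing in stock) get covered without touching the DOM.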

These days, good test titles might be all that's needed. AI can usually write good enough tests. But AI isn't yet great at knowing what to test. The scenarios still have to come from you.

Conclusion

Should every frontend dev do the same? Probably not.

But find the thing that scares you. The thing you've been hoping someone else will handle. That's your version of this. It has also finally given me a real answer to that stupid interview question.

Hit me up on X with the links below if you want to discuss pretty much anything.

Thank you for reading!